OpenSurfaces: A Richly Annotated Catalog of Surface Appearance

Sean Bell, Paul Upchurch, Noah Snavely, Kavita Bala

Cornell University

ACM Transactions on Graphics (SIGGRAPH 2013)

Paper (49MB PDF) Supplemental (13MB PDF) Slides (273MB Keynote '09)

Abstract:

The appearance of surfaces in real-world scenes is determined by the materials, textures, and context in which the surfaces appear. However, the datasets we have for visualizing and modeling rich surface appearance in context, in applications such as home remodeling, are quite limited. To help address this need, we present OpenSurfaces, a rich, labeled database consisting of thousands of examples of surfaces segmented from consumer photographs of interiors, and annotated with material parameters (reflectance, material names), texture information (surface normals, rectified textures), and contextual information (scene category, and object names).

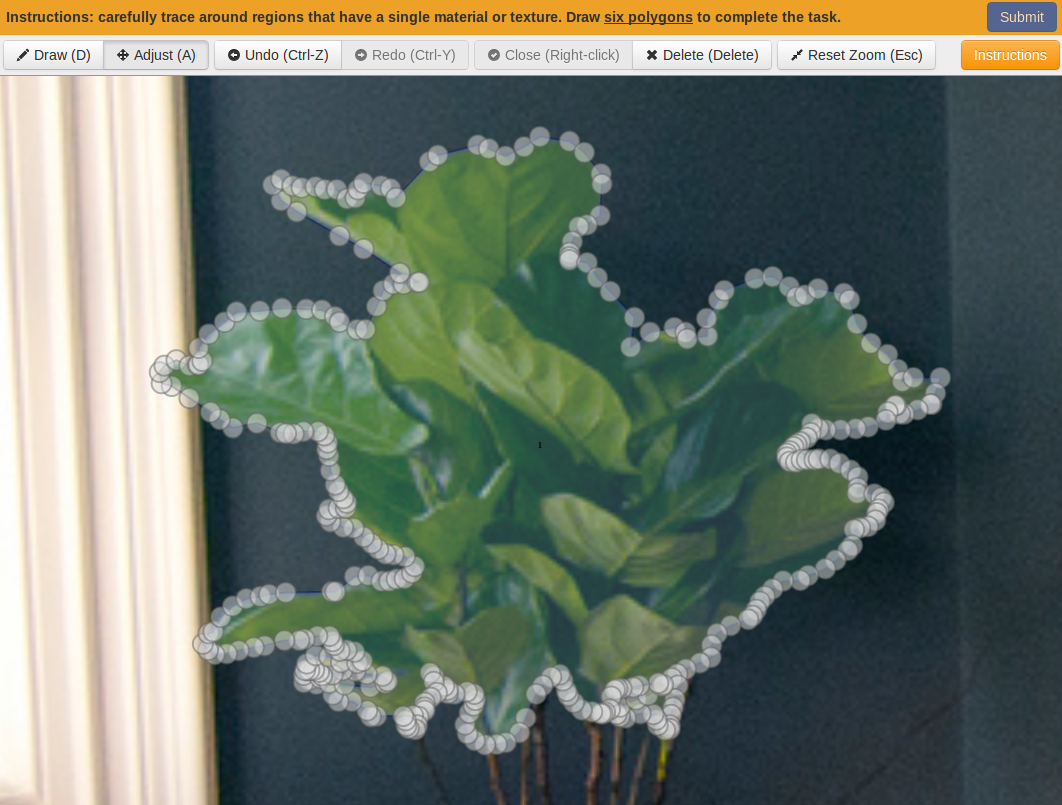

Retrieving usable surface information from uncalibrated Internet photo collections is challenging. We use human annotations and present a new methodology for segmenting and annotating materials in Internet photo collections suitable for crowdsourcing (e.g., through Amazon’s Mechanical Turk). Because of the noise and variability inherent in Internet photos and novice annotators, designing this annotation engine was a key challenge; we present a multi-stage set of annotation tasks with quality checks and validation. We demonstrate the use of this database in proof-of-concept applications including surface retexturing and material and image browsing, and discuss future uses. OpenSurfaces is a public resource available at http://opensurfaces.cs.cornell.edu/.

BibTeX:

@article{bell13opensurfaces,
  author = "Sean Bell and Paul Upchurch and Noah Snavely and Kavita Bala",
  title = "Open{S}urfaces: A Richly Annotated Catalog of Surface Appearance",
  journal = "ACM Trans. on Graphics (SIGGRAPH)",
  volume = "32",
  number = "4",
  year = "2013",
}

Download the dataset

Download the dataset and helper script (below), unzip the dataset, and then run the script: python process_opensurfaces_release_0.py

The helper script parses the JSON in "Release 0" and automatically downloads all images for the dataset. It also generates scene-parsing-style label images (where each pixel encodes the material, object, or scene class) and CSV files, which should be much more convenient than the original JSON files. See the documentation inside the script for more details.
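As a rough illustration of what "the pixel encodes the class" means, the sketch below counts how much of an image each class covers in a per-pixel label map. It is not taken from the helper script: the integer ids, the use of 0 for unlabeled pixels, the class names, and the function names are all assumptions made for this example. The actual encoding is described in the documentation inside the script.

```python
from collections import Counter

# Hypothetical id-to-name table (assumption); the real mapping comes from
# the files that the helper script generates.
EXAMPLE_CLASS_NAMES = {0: "unlabeled", 1: "wood", 2: "tile"}


def class_pixel_counts(label_map):
    """Count how many pixels carry each class id in a 2D label map."""
    return Counter(class_id for row in label_map for class_id in row)


def class_fractions(label_map, names=EXAMPLE_CLASS_NAMES):
    """Return the fraction of the image covered by each named class."""
    counts = class_pixel_counts(label_map)
    total = sum(counts.values())
    if total == 0:
        return {}
    # Ids missing from the table get a generic fallback name.
    return {names.get(cid, f"class_{cid}"): n / total for cid, n in counts.items()}


# A tiny 3x4 stand-in for a label image loaded from disk (made-up values).
example_label_map = [
    [1, 1, 2, 2],
    [1, 1, 2, 0],
    [1, 1, 0, 0],
]

if __name__ == "__main__":
    print(class_fractions(example_label_map))
```

In practice the label map would be read from one of the generated images with an image library, and the name table would come from the generated CSV files rather than being hard-coded.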

Release 0 (1.1G) Helper script (25K)

Download the full crowdsourcing platform

Our platform now includes both OpenSurfaces and Intrinsic Images in the Wild.

Code (Github repository) Documentation

Download the segmentation user interface

This repository contains our segmentation user interface extracted as a lightweight tool. A dummy server backend is included to run the demo.

License

The annotations are licensed under a Creative Commons Attribution 4.0 International License. The photos have their own licenses.

Acknowledgements

This work was supported by the National Science Foundation (grants IIS-1161645, NSF-1011919, and NSF-1149393) and the Intel Science and Technology Center for Visual Computing. We are grateful to Albert Liu and Kevin Matzen, and to Kevin for the paper video. We also thank our MTurk workers, and Patti Steinbacher in particular. In the supplementary material, we acknowledge the Flickr users who released their images under Creative Commons licenses. Finally, we thank the reviewers for their valuable comments.

Computing resources for this project were provided by AWS for Education.

Header background pattern: courtesy of Subtle Patterns.